The true cost of a private LLM

TL;DR: Deploying and hosting a private model is incredibly expensive if you're doing anything more than sending a few simple tokens for basic requests. This option should only be considered for organizations that are willing to pay the price for the privacy peace of mind.

If you're building or selling a B2B product in the AI space, you've probably heard some flavor of this question:

"But how do I trust the AI? We have sensitive data and we don't want the AI to be trained on it."

We certainly have. At Fabi.ai, this question has landed in our laps countless times, especially from large enterprises in highly regulated industries like finance and healthcare. After diving deep into this challenge, we now offer private LLMs to our most privacy-focused enterprise customers.

But if we cut to the chase—this solution comes with serious trade-offs: it's expensive (like, really expensive) and the performance just doesn't come close to the state-of-the-art of what the big commercial providers offer.

I'm writing this post to share what we've learned so you don't have to stumble through the same obstacles we did. I'll walk you through the considerations, costs, and where I think this whole market is heading.

Quick disclaimer: We're data analytics folks, not AI infrastructure wizards. Our experience comes from building products that integrate with LLM provider APIs. And given how fast AI is evolving, some of what I'm sharing might be outdated in a few months (or weeks?). If you've found better solutions or frameworks we should look into, I'd love to chat—drop us a line at hello@fabi.ai. And a quick plug for a fairly vibrant community that talks local LLMs: r/LocalLlaMA.

Why build a private LLM?

You probably already know the answer if you're reading this, but here's the heart of it: Companies in regulated industries are legitimately concerned that using models from OpenAI, Anthropic, or Google puts their data at risk. The nightmare scenario haunting their compliance teams goes something like this: "What if someone else using that same LLM asks 'What are Acme's forecasted earnings for this quarter?' and gets our confidential data?"

For many organizations, this isn't just paranoia—it's a genuine regulatory concern that can't be brushed aside.

Our LLM selection process and requirements

Before I dive into models and costs, let me share some context about how we use AI in our product. This matters because your use case might lead you down a completely different path:

- We use an agentic approach: Our system functions like a smart assistant that takes multiple steps to solve problems. This means our token usage isn't just one prompt in, one response out—the context window grows dynamically based on what the user is asking.

- We need function calling: Not all models support this feature out of the box. For example, Llama-3.3-70b can do it, but Gemma-3-27b-it can't.

- Our usage comes in bursts: Typical user patterns involve concentrated activity for a few hours, with questions coming roughly every 3 minutes from a single user during peak times. Sometimes we'll have multiple users on a same team active simultaneously, but it's not constant.

- Speed matters: Our users expect answers within 5-30 seconds, depending on how complex their question is.

Based on these needs, we narrowed our selection criteria to:

- Must support function calling

- Must be open source for private deployment

- Must handle our somewhat spiky usage patterns

- Must be fast enough and smart enough to keep customers happy

Now that we have the requirements set, let’s dive in.

The cost of running an LLM

Basics of LLM

Before diving right into options and cost, it’s worth talking about the different parameters that come with LLMs models, what they mean and how they relate to cost.

- Large models ~= better accuracy: This isn’t absolute, but as a rule of thumb the higher accuracy and reliability you can expect. This is changing rapidly and their are some smaller models that are performing as good or better than larger models, but give two models released around the same time, you can generally expect that larger means better.

- LLM memory footprint is one of the largest cost factors:

- Larger number of parameters generally means larger memory footprint (file size). Putting inference aside, you need to simply be able to start by deploying the models on your machine, and if you have insufficient storage you’ll be blocked right out of the gate.

- Quantification: How each model is represented, whether with a 2 bytes float, 1 byte or 4 bit can impact both memory footprint and accuracy.

- O(n^2) space complexity for context window: larger context windows exponentially increase the demand for more compute. Agents are gluttons for context tokens, so the larger the better.

- GPUs are a must-have: You can find machines that have sufficient memory and storage to store the model and run it, but that doesn’t mean it will be functional for your end-users. As of the writing of this post, the model we opted in for emits 46 tokens per second, while that same model on a different machine that doesn’t have GPUs emits 3 tokens per second.

Options and cost breakdown

After sifting through options, we focused on these models:

- Qwen-2.5-32b-coder-instruct

- QwQ-32b

- Llama-3.3-70b (since writing this post, we could consider Llama-4 scout)

- Deepseek-v3-0324-671B

Here's where reality hits hard. The first thing to consider is how much memory these models need:

Qwen-2.5-32b & QwQ-32b

- Model File Size: ~70GB

- Required AWS Instance: g5.12xlarge (4x NVIDIA A10G GPUs)

- Hourly Cost: $5.76 😕

- Annual Cost (24/7): $50,370

Llama-3.3-70b

- Model File Size: ~150GB

- Required AWS Instance: p4d.24xlarge (8x NVIDIA A100 GPUs)

- Hourly Cost: $32.77 😨

- Annual Cost (24/7): $287,065

Deepseek-v3-0324-671B

- Model File Size: ~700GB

- Required AWS Instance: Multiple specialized instances

- Hourly Cost: Didn’t even consider after we looked at the first two models 😭

- Annual Cost (24/7): –

Yes, you read that right. Running Llama 3.3 70B 24/7 will set you back nearly $300K a year. And that's just the baseline.

Sure, there are clever ways to optimize these costs. You can split models across machines or use quantization techniques. But these numbers should give you a realistic starting point.

And here's the real kicker—Livebench benchmarks show that Gemini 2.5 Pro (which you can use for pennies) scores 85.87 on coding tasks, while Qwen-2.5-coder-32b-instruct scores only 56.85. That's a 51% performance advantage for the commercial model compared to a solution costing you $50K annually to run privately!

In general, model size and specialized training correlate with code accuracy. A 30B-ish specialized code model (Code Llama 34B, etc.) will get ~50–55% on easy coding tasks, whereas a 7B model is around 20–30%. New techniques (MoE, better data) can shift this curve, as seen with Mixtral and DeepSeek managing near-GPT3.5 performance at smaller scales. Choosing a model involves balancing this accuracy need with cost, as we examine next.

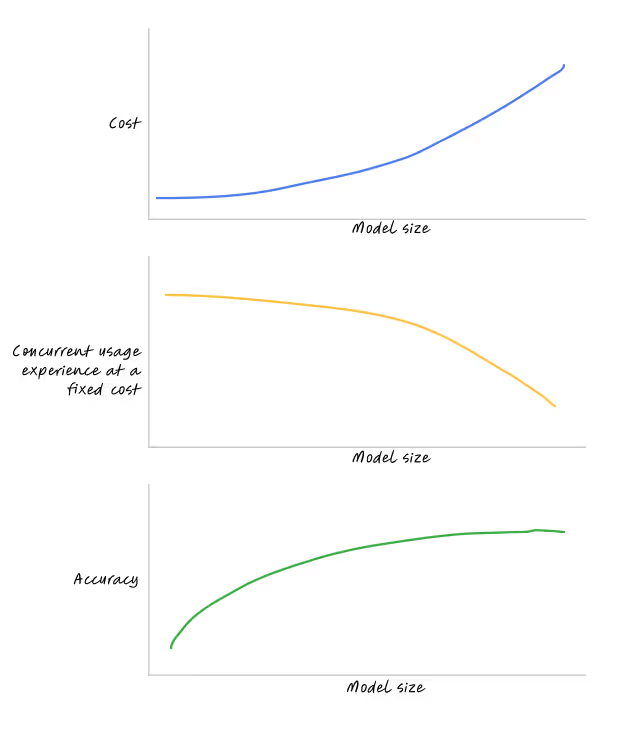

At this stage, it’s clear that generally larger models are more accurate and more expensive to run. What about concurrency?

Balancing accuracy and concurrent usage

So far we’ve been talking about the cost and performance of the model without any consideration for concurrent usage. If you’re running a model on a single GPU, can you have any number of users calling that model? Not quite…

First, let’s get specific about what we mean by concurrency: you may have multiple users using your product at the same time, but concurrency in this scenario means that users are literally engaging the AI at the exact same time. If a typical response takes 5 seconds and users ask a question 15 seconds apart, they’re not concurrently using the LLM.

Given that, the faster the model responds, the lower the risk of true concurrency or long queues. Smaller models (2.7B-7B parameters) excel at speed, generating responses 4-5 times faster than their larger counterparts. On a standard A10G GPU, these small models can produce 20-50 tokens per second, making them highly responsive even under load. Conversely, larger models (30B+ parameters) offer superior reasoning and capabilities but at a significant speed cost. With the same hardware, they typically manage only 5-10 tokens per second, creating potential bottlenecks when serving multiple users simultaneously.

What this means in practice is that for light usage (2-3 concurrent users): larger models can maintain acceptable performance on a single GPU. Requests will process sequentially with minimal wait times, creating a smooth experience for small teams. On the other hand for moderate usage (5-10 concurrent users), you’ll find that smaller models still offer the best experience all things being equal.

This chart is by no means based on real data, but it illustrates the tradeoff between cost, accuracy and concurrency.

I won’t sugarcoat it: finding the right balance is a lot of work and I don’t think we’ve found it yet for ourselves. We’re still at the beginning of our journey and there are likely a number of ways to improve what we’ve built on so far.

Making private LLMs less painful

We've tried (and are still experimenting with) several approaches to make this more economically viable:

1. Part-time deployment

Instead of keeping the servers running 24/7, consider spinning them up only when needed. Most models take just a few minutes to initialize. If your users can wait briefly or if you can predict when they'll need the system, you might turn a six-figure annual cost into something much more manageable.

2. Smart usage management

Be clever about how you handle user requests:

- Add progress indicators so users know something's happening

- Implement a queue system during peak times

- Create a UI that feels responsive even when the backend is chugging away

3. Better prompt engineering

One big factor in both cost and performance is context window size. Getting smarter about how you structure prompts can help:

- Only retrieve the most relevant information for each query

- Break large documents into chunks

- Use summarization to condense intermediate results

Prompt engineering may not actually just be a cost optimization question, it may simply be a necessity. For example we’ve found that Qwen-2.5-32b code interpreter is extremely chatty. Putting aside how distracting this is for the user, it outputs so many tokens that it leaves little room for the prompt and can quickly saturate the context window.

4. Play the waiting game

The AI landscape is changing at breakneck speed:

- Models are getting better AND smaller (remember how GPT-2 was state-of-the-art not that long ago?)

- Computing costs will likely drop as competition increases

Some of the challenges you're facing today might solve themselves in 6-24 months if you can afford to wait.

So... Is it worth it?

As with most things in life: it depends entirely on your situation.

If you're selling to highly regulated healthcare organizations for a high six figure ACV with strict data policies, then yes, a private LLM might be essential despite the costs and you can likely pass the cost on to the customer.

If you're building a consumer app with thousands of users paying $5/month and interacting with the LLM every few minutes, then no, the economics probably won’t work out for you with today's technology.

The current reality is that major LLMs providers are some of the best funded companies in history and they’re in an arms race to produce THE model that everyone will use. As consumers and integrators of these LLMs solutions, we’re in a position to hugely benefit from this clash of Titans. Paying a few cents on the dollar per million token for the best AI we’ve ever had is hard to beat and we know that it’s coming at the cost of exorbitant burn rates from these providers. Is this sustainable and will these LLM providers figure out how to make the dollars and cents add up? Only time will tell.

What I tell our customers

When customers bring up this question, here's what I usually tell them:

"The extra cost—both in dollars and reduced performance—is essentially what you're paying for in privacy and independence from major LLM providers. That's a perfectly valid choice for many businesses, but you should go in with eyes wide open about what that trade-off means."

Interestingly, we're seeing more large enterprises adopt policies that specifically approve certain AI providers rather than banning external AI altogether. This is one reason we've designed our product to remain LLM-agnostic.

Where this is all heading

Looking ahead, I believe most organizations will eventually settle on an LLM provider they trust, with appropriate data handling agreements. From conversations with data leaders across industries, it’s becoming clear that organizations that aren’t adopting AI are falling behind their competitors and that pressure is causing IT and security teams to think carefully about their stance. Meanwhile, highly privacy-sensitive industries will pay premium prices for specialized solutions built specifically for their needs and that will likely always be the case.

That said, if we take the most extreme example of a privacy minded organization - government agencies - in the future will they pay a steep premium for individual providers to come with their own models or will they simply partner with major LLM providers to host their own models? My intuition tells me that these organizations will likely want a Bring Your Own Model approach expecting vendors to integrate with their model.

Have you been wrestling with similar decisions? Found solutions we haven't mentioned? I'd love to compare notes—reach out at hello@fabi.ai.

.svg)